Using AI in the classroom: A cheat code or a tool?

AI is reshaping classrooms, particularly in language learning. While excessive dependence can undermine authentic learning, thoughtful integration allows students to personalize their practice, receive instant feedback, and develop new skills.

Rather than banning AI, educators are increasingly focusing on guiding its ethical and effective use. They’re establishing clear boundaries, designing tasks that encourage original thinking, and teaching students how to write effective prompts—ensuring that AI becomes a valuable learning aid, not just a shortcut.

Hannah had two back-to-back university deadlines while battling COVID. Stressed and desperate to submit the work, she turned to AI to help write one of her assignments. Her teacher discovered the ruse through an AI detector and alerted the university. In the end, Hannah received only a warning, but after much anxiety over possible expulsion, she regrets her choice since it severely tainted her first year at uni.

This is the reality teachers now face: the rise of AI in student work forces educators to confront questions about learning, integrity and student development. How can AI be used as an aid without enabling cheating? Should we accept this technology as inevitable? As we weigh these questions, teachers are already adapting. In the U.S., for example, some schools are replacing essays with project-based learning to avoid overreliance on AI.

And what about language learning? Can we also integrate AI responsibly here? What are the challenges and opportunities? Let’s explore these debates together.

- AI in education: Here to stay

- Misusing AI: When it hurts more than it helps

- Is it cheating or progress?

- Ground rules: Principles for ethical AI use

- How teachers can use AI well: Practical strategies that work

- Pushbacks and challenges

AI in education: Here to stay

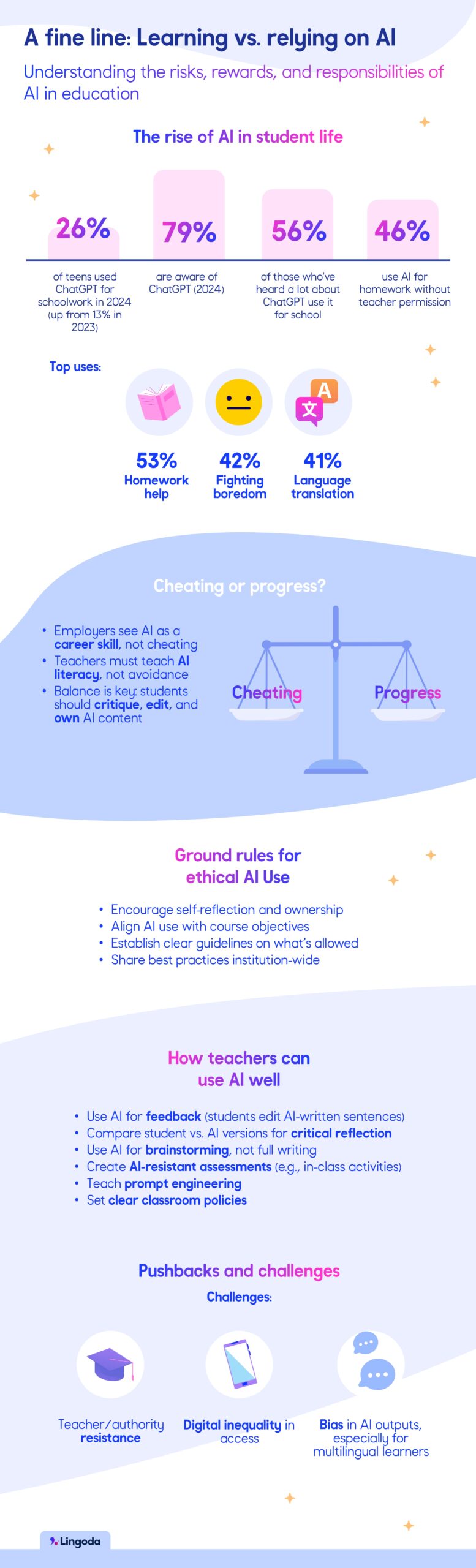

AI use in student work is here to stay, as shown in this U.S.-based data:

- The share of teens who admitted to using ChatGPT for their schoolwork increased from 13% in 2023 to 26% in 2024

- 79% of teens reported being aware of ChatGPT in 2024, up from 67% in 2023

- Among those who say they’ve heard ‘a lot’ about ChatGPT (32% in 2024), 56% report using it for schoolwork, and 79% believe its use for school is acceptable

- Two in five teens have used generative AI to help with homework, with 46% doing so without teacher permission (2024)

- Teens primarily use AI for homework help (53%), to fight boredom (42%) and for language translation (41%) (2024)

These numbers reveal how students are approaching AI with increasing normalcy and ease. This creates challenges for educators who want to prevent misuse and continue to teach students problem-solving and social skills.

However, the rapid growth and accessibility of AI make measures like blanket bans difficult to implement. Teachers must therefore take an active role: helping students recognize the pitfalls of relying on AI, guiding them to view it only as a supportive tool, and adapting to this new landscape themselves.

Misusing AI: When it hurts more than it helps

Using AI for language learning can have great benefits. For example, teachers can use AI tools like voice generators, chatbots and speech recognition to personalize their students’ experience, provide real-time feedback and create a more immersive environment. Additionally, students who live in isolated areas or can’t access lessons can use AI-powered learning apps to bridge these gaps.

However, misusing this technology will inevitably undermine authentic language learning. Let’s take a look at some of these drawbacks:

- Using AI as a crutch: Overrelying on AI hinders our ability to learn from our mistakes and develop fluency. Challenges and friction are what push us to strengthen our problem-solving skills

- AI is still learning: It can generate incorrect information or unnatural phrasing, which confuses students and slows their progress

- Can lead to lack of fluency: AI produces repetitive language and structures, so students don’t practice variety and context-based language

- Biases: GPT detectors frequently misclassify writing by non-native English speakers as AI-generated, which can disenfranchise those speakers in educational contexts and in search engine results

Is it cheating or progress?

While we can’t ignore that AI presents new opportunities for plagiarism, AI literacy is quickly becoming an in-demand skill in the labor market. This means that just as teachers once adapted to the use of calculators and search engines, they must now also embrace AI as a tool that can strengthen their students’ learning and career prospects.

So a point of tension emerges: to what extent should educators teach AI, and when should they curb its use? AI isn’t going anywhere, and while teachers may see it as a tool for cheating, companies see it as a means for efficiency and profit maximization. This is why it’s important to integrate AI into the curriculum. Ignoring it could leave students unprepared for jobs where AI literacy is expected. Teachers should guide students to critically evaluate AI-generated content, take ownership of the final work and use AI to their advantage while still engaging in a cognitive challenge.

Taking the time to acknowledge ethical discussions surrounding AI, such as privacy, bias and environmental concerns, is also crucial. In this way, we can move towards responsible AI usage that enhances our capabilities.

Ground rules: Principles for ethical AI use

Responsible AI use in education starts with agreed principles and transparent classroom policies. The following could be guiding starting points:

- Core values: Educators should encourage students to reflect on how they learn, and when and why they should use AI, while being responsible for their own work. Open conversations foster trust and transparency, which can help students feel comfortable disclosing AI use.

- Align AI use with course goals: Define when and why AI fits and supports the learning objectives. In language learning, for example, tutors should make sure AI use aligns with CEFR language levels.

- Sharing best practices: Institutions should create spaces for collaboration and research on the equitable and effective use of AI in education. This will help build guidelines and training programs. Plus, sharing best practices across stakeholders and researchers can help identify where AI is beneficial and where it’s limiting.

How teachers can use AI well: Practical strategies that work

Using AI in language learning can make the learning experience more personalized and efficient. So, how can we use AI to support students? Here are some ideas.

AI as a feedback partner

To reinforce the writing process, students can prompt an AI model to generate some sentences in the target language. Then, they can revise the content, make suggestions and identify mistakes. This will help them improve their grammar, vocabulary and editing skills.

Scaffold prompting and comparisons

Another effective way to get language students to improve their critical thinking and language skills is to have them write their own content first and then compare it with AI suggestions. This will encourage a personalized reflection on tone and grammar.

Use AI for brainstorming and vocabulary support

Language tutors can encourage students to use generative AI to help with idea generation and vocabulary support, like finding synonyms or collocations, all while discouraging them from using it to complete entire assignments.

Create AI-resistant assessments

It’s also beneficial to design AI-resistant assignments that encourage students to immerse themselves in the language in a challenging way. Activities such as in-class writing and listening or speaking exercises are good options.

Teach prompt literacy

Knowing how to write clear and precise instructions for LLMs will help students get more useful outputs. A good prompt should have a goal, context and specific requirements for the task.
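As a rough illustration, the three ingredients of a good prompt can be laid out as a simple template. The Python sketch below is only one possible way to do this: the function name `build_prompt`, the labels and the example wording are all hypothetical, chosen for illustration, and the resulting text could be pasted into any chatbot.

```python
def build_prompt(goal: str, context: str, requirements: list[str]) -> str:
    """Combine a goal, a context and a list of requirements into one prompt.

    Hypothetical helper for illustration only; the labels are an assumption.
    """
    lines = [
        f"Goal: {goal}",
        f"Context: {context}",
        "Requirements:",
    ]
    # Each requirement becomes its own bullet so none of them gets lost.
    lines += [f"- {item}" for item in requirements]
    return "\n".join(lines)


# Example wording is invented; students would fill in their own task.
prompt = build_prompt(
    goal="Give me feedback on the short paragraph I wrote in Spanish.",
    context="I am an adult learner at CEFR level A2.",
    requirements=[
        "Point out each mistake and explain it in simple English.",
        "Do not rewrite the paragraph for me.",
    ],
)
print(prompt)
```

Keeping the three parts separate makes it easy for students to spot what a weak prompt is missing, for example a request with a goal but no context about their level.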

Define clear classroom AI policies

It’s paramount to establish and communicate clear boundaries for acceptable AI use. This will build trust, transparency and accountability.

Pushbacks and challenges

Despite the advantages of using AI in education, teachers might still face pushback and barriers to adopting it in the classroom, such as:

- Resistance from other teachers or authorities: Change means adapting to new standards, guidelines and technologies, often without full certainty about the results. This can be overwhelming, particularly for those who lack training.

- Debates on equality: Unequal access to devices, training and connectivity creates gaps among groups with different socioeconomic backgrounds and levels of technological expertise, preventing some communities from adopting new technology.

- Biases in AI: AI models can reproduce biases from the data they were trained on when generating results. This can impact multilingual learners, who may be incorrectly flagged for plagiarism.

From ‘either/or’ to ‘both/and’

The rapid growth of AI has put educators at a crossroads: should this technology be embraced or thwarted? Given how widespread AI has become, resisting it entirely will be futile. Instead, the question should be: how can we use AI to support learning while preventing overreliance and dishonesty? By setting clear goals and ethical guidelines, and remaining mindful of the pitfalls, teachers can experiment with AI to help students develop their skills more efficiently. In this way, AI can ultimately become a valuable educational tool and not just a shortcut.
